20 learning measurement ideas to show impact and improve performance


How do you know if your corporate elearning project is working? Measuring learning effectiveness and impact shouldn’t be cumbersome or a job in itself. It should be about doing just enough to prove the value of your learning programs, where it matters most. And the time to measure learning doesn’t have to be right at the ‘end’. Here are 20 learning measurement ideas that you can track – you may need just one!

Proving the value of your learning programs

Proving the effectiveness of online learning needn’t be a time-consuming, tricky task. In fact, putting together a solid learning measurement strategy is easier than you may think!

Let’s assume that as a learning manager you and your team enter your project with a clear grasp of the learning objectives, what success looks like, who the audience really are, and what’s going to make the biggest difference in order to see a performance improvement in those target areas. (You can get practical help with that in our project planning template.)

How do you then know if the learning project that you’ve commissioned or designed is actually helping with that goal? And, more importantly, how can you prove the value of your learning project in terms of business impact?

This becomes increasingly important when you’re working at scale, with multiple team members and Subject Matter Experts (SMEs) creating their own projects – assessing consistent quality levels and impact across the board is crucial.

Learning data – it doesn’t have to be big, it doesn’t have to be scary, and it’s probably at your fingertips.

Rather than thinking of online learning evaluation as something you do at the ‘end’ of your project (if performance improvement and development ever has a hard end), here we share 20 ideas for learning measurement that you can track before content development, during development, and after launch.

Getting a learning measurement process in place throughout the life of a project is key. It’s also vital to remember that these measurement approaches aren’t just about hard stats and learning analytics – they are people-centered because that’s who you’re working for!

“You have more data than you think, and you need less than you think”.

Douglas Hubbard (inventor of Applied Information Economics)

The good news is, you probably only need two or three stats, carefully chosen, combined and compared to show a correlation between your corporate elearning and added value. If you’re worried about how to show the value of your online learning if it sits amongst a blend of other learning, performance aids and business initiatives, read on for ways to draw correlations.

5 ways to measure performance improvements

If you’ve done your homework upfront and captured your project goals and success measures, this part should be fairly obvious. In essence, if your online learning project aims to increase sales, drive up customer ratings, reduce errors, increase retention, improve Glassdoor reviews, or whatever the reason it deserves to exist – that’s what you need to track! But don’t do it alone! Here are some measurement approaches learning managers should explore:

1. Self-measures

Ask users to evaluate themselves against certain success factors and plot where they feel they need to improve. Then ask them to track their performance against these goals during and after they’ve taken part in your project. There are plenty of survey tools out there to help, or consider building it into your digital content.

2. Peer measures

Get peers to evaluate one another and help provide evidence-based feedback on how a teammate is performing against their measures.

3. Manager measures

Managers are key to both employee engagement and the success of your learning project. Involve them in the evidence-based evaluation of their team, to help you track improvements.

4. Team evaluation

A slightly softer take on the above, but people-centered learning doesn’t just mean looking at individuals. Often, setting a team a goal and tracking their improvement helps boost performance. Monitor the improvement of certain teams on their hard stats (see below), and have teams self-assess their own performance and peer-assess other teams’ performance in key areas too.

5. Hard stats

This is where you need to get a bit SMART. Start with a benchmark – understand what the current measure shows and look to increase it by a certain percentage.

Example: The goal is to ‘increase efficiency’ in a customer support team

Your hard stat measure may be to reduce customer response time by 20% by Q4 (you have to know where it’s at now). To help that team get more efficient, you need to know where the team’s time is going. Observations, time-sheet data, and self-reflective team, peer and self-evaluations and surveys will help you map out further goals. For example, if too much time is being spent doing X, that needs to be reduced (the target may be to tighten a process or reduce the length of internal meetings).
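If you like to see the sums, here is a minimal Python sketch of turning a benchmark into a target and checking how far along you are. The function names and the figures (a 50-minute baseline response time, currently at 45 minutes) are made up for illustration; swap in your own benchmark.

```python
def reduction_target(baseline: float, reduction_pct: float) -> float:
    """Return the value you are aiming for after cutting the baseline by reduction_pct percent."""
    return baseline * (1 - reduction_pct / 100)


def progress_toward_target(baseline: float, current: float, target: float) -> float:
    """Return the fraction of the planned reduction achieved so far (0.5 means halfway)."""
    planned = baseline - target
    if planned == 0:
        raise ValueError("target must differ from baseline")
    return (baseline - current) / planned


# Hypothetical figures, for illustration only.
baseline_minutes = 50.0   # where response time is now (the benchmark)
current_minutes = 45.0    # where it is at the latest check-in
target_minutes = reduction_target(baseline_minutes, 20)  # the 20% reduction goal

progress = progress_toward_target(baseline_minutes, current_minutes, target_minutes)
print(f"Target: {target_minutes:.1f} min, progress so far: {progress:.0%}")
```

Checking progress as a share of the planned reduction, rather than just looking at the raw number, makes it easier to compare teams that started from different benchmarks.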

5 ways to measure if your content or learning program is working

You’re tracking performance improvements through soft and hard evaluations, but how do you know if your project – whether built by yourself, the wider L&D team, or SMEs – is making a difference to that? You want to be tracking a correlation between the learning you’ve invested in and your audience’s performance, right? Here’s how:

1. Survey users

You’re trying to get a bunch of people to get better at something that adds value. So ask them for feedback on whether the online learning resources and experiences you provide are helping them get there, and what else is helping them too. Drop in a simple and quick user survey at the end of each piece of content.

2. Track usage

Are you engaging the number of users you’d expected to? (If not – see below for other measures to help you find out why). Which pieces of content are getting the most users? Use this data alongside some performance tracking to see if you can spot any trends.

3. Track sessions

Are users coming back to the same pieces of content time and time again? If you’ve designed performance support resources to be used on the job, this would be a key success factor to track.

4. Track shares

Which pieces of content are users sharing with others? Monitoring what is getting traction, virally and on social platforms, is a great indicator of success. If one of your goals is around employee engagement or creating a social ‘buzz’, this helps you spot success.

5. Spot spikes

Can you see spikes in social activity, performance improvement data or performance evaluations – self, peer or by a manager? Do they map to moments when content was launched or got a lot of hits? What else helped drive these? (Use your surveys to help you.)

Example: The goal is to ‘increase efficiency’ in a customer support team

In a two-week period there’s a big shift in the team, who respond to more customer queries and have less downtime than ever before. Looking at the content data, two out of ten pieces of online learning content got particularly high usage and high user ratings the week before and during these two weeks. Meeting time also dropped. These two components have made a proven difference. Measurement should now turn to looking at longer-term impacts.
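One simple way to check whether usage and performance move together is a correlation coefficient. The sketch below is only an illustration: the weekly numbers are invented, and `pearson` is a hand-rolled helper rather than anything from a particular analytics platform. A high value suggests the two rise and fall together, but it does not on its own show that the content caused the change, so pair it with your survey feedback.

```python
from math import sqrt


def pearson(xs, ys):
    """Return the Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of the same length (at least 2 points)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("a series with no variation has no defined correlation")
    return covariance / sqrt(spread_x * spread_y)


# Hypothetical weekly data, for illustration only.
weekly_content_views = [12, 15, 14, 40, 55, 30]
weekly_queries_resolved = [200, 210, 205, 260, 300, 250]

r = pearson(weekly_content_views, weekly_queries_resolved)
print(f"Correlation between content views and queries resolved: {r:.2f}")
```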

5 micro measures that help you see why certain learning content is or isn’t working

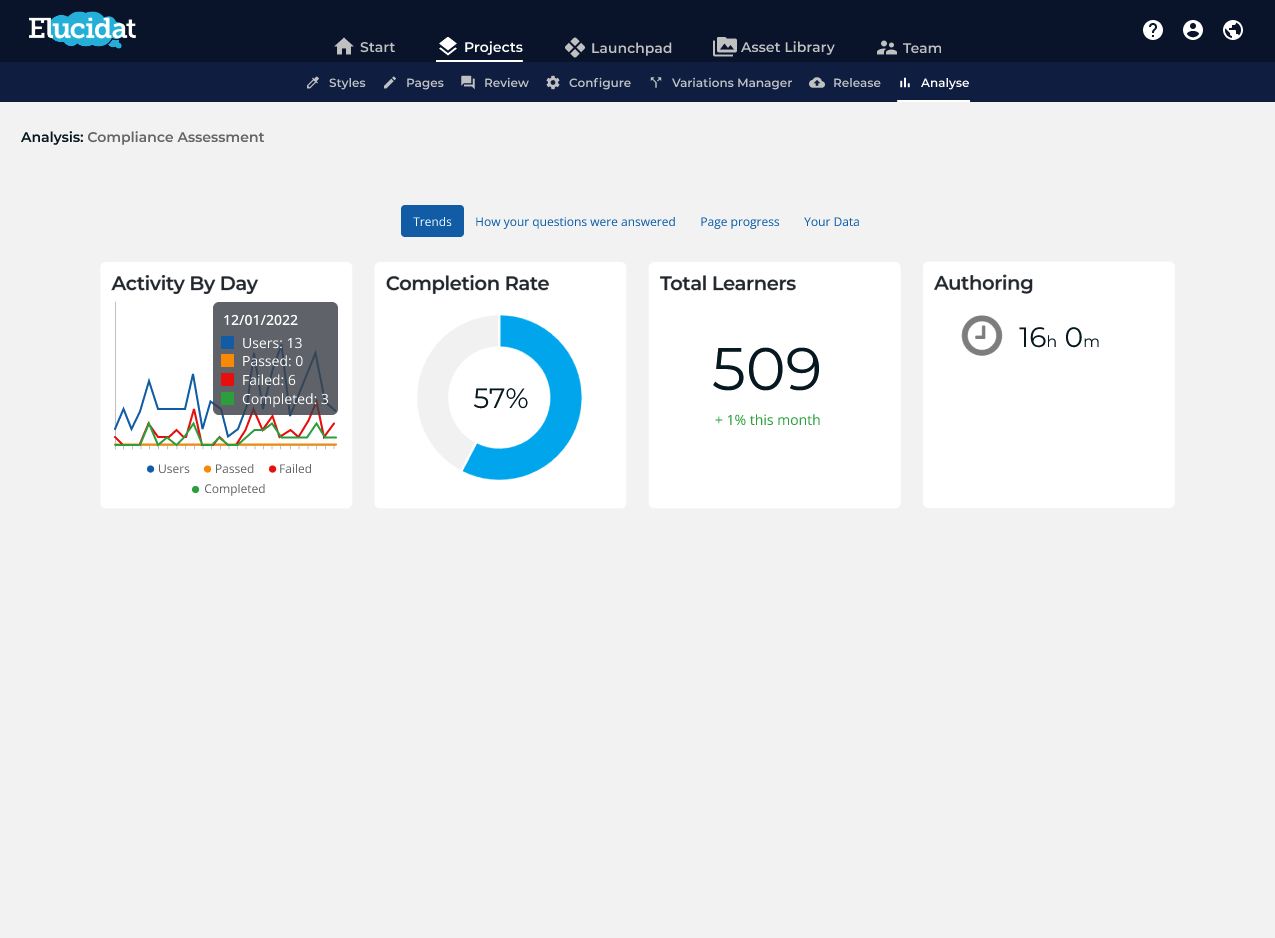

Learning analytics are pretty easy to get your hands on these days and should form an important part of any learning manager’s toolkit. Sources range from web stats like Google Analytics, to LMS data, through to in-built dashboards in your platforms that present live usage data in a simple way. If you want to know why a certain element of your project is or isn’t performing well in the grand scheme of things, take a look at these:

1. Most/least popular pages

Which particular pages are getting the most hits? (And if you have time or are keen to build on your successes, what do they have in common?)

2. Drop off points

Where are users dropping off? If you’re losing your audience, suss out where, and then look into why. Is the content not relevant? Is there a UX issue? (Is it dull!) Look to your user surveys or just ask a couple of people!

3. Questions or challenges

Look at your questions and how users are answering and scoring on them. Are your challenges too hard or too easy? Is this throwing users off and making the challenges less useful?

4. Usage by location

Most dashboards will show you usage stats by location. Is a target location lagging behind another? Investigate why and intervene to increase your stats.

5. Devices used

Did you expect your content to be used on the fly, on mobiles, in short sessions, yet most users are accessing it via desktop (for longer periods of time)? Is this negatively affecting their experience or just a surprise to you? Consider if you need to realign your design or where and when you’re launching your content.

Take a look at the top 10 learning analytics to track.

The online learning content that had the highest usage and highest user ratings, and that correlated with an increase in customer responses, had a certain format, a consistent layout, and was accessed on all device types. But the content that didn’t do as well was longer-form and didn’t perform as well on mobiles – users dropped off at around page 3 of 10. User feedback also rated this content as less useful. You can feed this into improving the design and reformatting these pieces to support people on the job, further down the line.
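If your dashboard gives you view counts per page, finding the drop-off point takes only a few lines. This is a rough sketch with invented numbers for a ten-page piece of content; the function names are hypothetical and not tied to any particular platform.

```python
def drop_off_rates(page_views):
    """Return the share of users lost between each page and the next one."""
    rates = []
    for current, following in zip(page_views, page_views[1:]):
        # Guard against dividing by zero if nobody reached the current page.
        rates.append(0.0 if current == 0 else (current - following) / current)
    return rates


def biggest_drop_off_page(page_views):
    """Return the 1-based page number after which the largest share of users leave."""
    rates = drop_off_rates(page_views)
    if not rates:
        raise ValueError("need view counts for at least two pages")
    return rates.index(max(rates)) + 1


# Hypothetical view counts for pages 1 to 10, for illustration only.
views = [100, 95, 90, 45, 40, 38, 36, 35, 34, 33]

page = biggest_drop_off_page(views)
print(f"Biggest drop-off happens after page {page}")
```

Once you know where users leave, go back to your surveys (or just ask a couple of people) to find out why.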

5 ways to measure if your design concept is right, in the first place

Rolling right back to the start of your project, hopefully, since your project has a clear objective and a problem to fix, you started with some concepts and prototypes to test out with your users. Here are five ideas for measuring if your concept is right. HINT: start by looking at the data from your last project!

You could try A/B testing:

- Different lengths – of videos, audio clips, resources, pages, topics, interactive challenges and so on.

- Formats – what works best? See point 1 for ideas!

- Roll out times and communication strategies – experiment with different roll out times and comms methodologies to see what gets you the most user hits.

- Different platforms – find out where your content performs best. Consider LMSs, learning portals and email drops or Yammer feeds that link to standalone content.

- And run surveys and user groups – yet again capture user feedback to help you gauge what your audience say they prefer, how they feel about the different options you present, and tally this up with some of the stats you’ll get via your data dashboards. Whilst this is different to performance evaluation, you can’t improve performance if your content isn’t engaging your users in the first place. So check it hits the mark.
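When you A/B test two versions, it helps to check whether the difference is bigger than you would expect from chance. One common approach is a two-proportion z-test, sketched below using only Python’s standard library. The numbers are invented (how many users completed version A versus version B), and the function is an illustrative helper, not a feature of any learning platform. It also assumes reasonably large groups; with only a handful of users, treat the result with caution.

```python
from math import erf, sqrt


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference between two completion rates."""
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (success_a + success_b) / (total_a + total_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_error == 0:
        raise ValueError("cannot test when every user (or no user) succeeded")
    z = (rate_a - rate_b) / std_error
    # Standard normal CDF via the error function.
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return z, p_value


# Hypothetical results, for illustration only:
# version A (short video) completed by 120 of 200 users,
# version B (long video) completed by 90 of 200 users.
z, p_value = two_proportion_z_test(120, 200, 90, 200)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value (a common rule of thumb is below 0.05) suggests the difference between the two versions is unlikely to be down to chance alone.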

Example: The goal is to ‘increase efficiency’ in a customer support team

Data from previous projects showed that launching content on Monday and Tuesday mornings got the highest number of hits. The team could also see that video length was best kept under 30 seconds, and that a combination of short on-the-job resources alongside deeper, self-assessment approaches was most helpful. Applying these insights to the new project, and trialling ideas with the team in user tests, showed that the format worked, but that a more modern, direct tone and styling was preferred and would encourage them to share on social platforms.

Taken from ‘An everyday guide to learning analytics’ by Elucidat & Lori Niles-Hofmann

Do you need all of these learning measurements?

While we’ve given you 20 ideas, don’t worry, you won’t need them all to prove the value of your projects! Pick a few from the list that when combined will help you track success and prove the value of your investment. Involving your end users and respecting their feedback is just as important a metric as those hard stats, which, let’s face it, can make us all lose sleep – especially if you’re going for people-centered elearning.

A bonus of using these learning measurements is that they’ll also provide you with a useful comparison of how elearning created by more novice authors, like SMEs, is shaping up, so you can better support them with creating impactful content.
